Scrape Discogs Marketplace with Python: A Step-By-Step Tutorial

Online marketplaces are beloved for offering a wide array of goods, from things we don’t need to things we didn’t know we needed. Among them, Discogs stands out as a premier platform for music enthusiasts and collectors of vinyl records, CDs, cassettes, and other formats. In essence, Discogs is to music records what IMDb is to film. Whether you’re exploring music market trends, tracking the value of vinyl records, or gathering data for a personal project, all roads lead to Discogs.

What is Discogs?

Launched in 2000, Discogs.com (named after "discographies") is a vital resource for music enthusiasts and record collectors. It offers comprehensive tools to explore artists’ discographies, organize collections, and build extensive catalogs.

Discogs began as a database of information about audio recordings. In 2005, it launched its marketplace, which unites a global community of music fans, collectors, and sellers who help each other discover and share music.

Today, Discogs boasts an extensive discography database, with over 16 million recorded releases, 8 million artist discographies, and 2 million record labels. Moreover, the platform features interesting resources such as a community forum, user reviews, and indicators showing how many people own a particular record or have it on their wishlist. This vast repository of music data makes Discogs truly invaluable for music lovers.

Why scrape Discogs?

With over 62 million items from thousands of sellers, Discogs hosts the go-to online shop for vinyl, CDs, and cassettes of popular new releases, rare collectible finds, and everything in between. Its marketplace is built on the extensive database, allowing sellers to list their inventory effortlessly and enabling buyers to find the exact version they want.

By scraping the Discogs marketplace, you can gain detailed insights and access a wealth of discography information that can be used for various purposes, from market analysis to personal collection management.

Market analysis

Scraping data from Discogs lets you gain a comprehensive understanding of trends in vinyl record prices and demand. This data can help identify which records are increasing in value, track the popularity of specific genres or artists, and even forecast future market trends. For businesses and individual sellers, this insight is invaluable for making decisions about buying and selling records.

Inventory management

Real-time tracking of record availability and pricing is crucial for both sellers and serious collectors. Scraping Discogs allows you to monitor inventory levels, compare prices across different sellers, and ensure that you are always aware of the current market conditions. This can help you make strategic decisions about when to buy or sell records to maximize profits or build a more valuable collection.

Personal collection insights

For collectors, it’s satisfying to maintain an accurate list of your records. Scraping Discogs can automate the process of cataloging your discography, ensuring you have the most current information about each item’s market value. This not only helps in managing your collection more effectively but also provides a clear picture of its overall worth, which is useful for insurance purposes or when considering selling a part of your collection.

Price comparison

Scraping data from multiple listings on Discogs enables detailed price comparisons. This helps buyers find the best deals and helps sellers set competitive prices. By understanding price variations and the factors behind them, you can make more informed purchasing decisions.

Trendspotting

Analyzing scraped data can reveal emerging trends in the music industry. For instance, you might identify an increasing interest in a particular genre or a rise in the value of records from certain artists. This can inform your purchasing decisions, marketing strategies, and overall business approach.

Data-driven decisions

Having access to a large dataset from Discogs allows for more robust data analysis. Whether you’re running a record store, managing an online shop, or are simply a passionate collector, data can guide your decisions on pricing, stock management, and marketing campaigns.

How to scrape the Discogs marketplace

Discogs provides an official API for extracting discography data and some marketplace information. Nevertheless, a custom script offers more flexibility in the specific data you can collect. On top of that, a scraper isn’t bound by the API’s rate limits, which allows for more extensive and continuous data collection, although the website has its own anti-bot protections, which is where proxies come in.
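For comparison, here’s a minimal sketch of an official-API request using only Python’s standard library. It assumes the Release Statistics endpoint from the Discogs API documentation (GET /marketplace/stats/{release_id}, with an optional curr_abbr parameter), which returns summary figures such as the lowest listed price and the number of copies for sale, not the individual listings this tutorial scrapes. The app name in USER_AGENT is a hypothetical placeholder.

```python
import json
import urllib.parse
import urllib.request

API_BASE = "https://api.discogs.com"
# Hypothetical app name; Discogs asks API clients to send an identifying User-Agent
USER_AGENT = "MyDiscogsResearch/0.1"


def build_stats_request(release_id: int, currency: str = "USD") -> urllib.request.Request:
    """Build a GET request for the marketplace Release Statistics endpoint."""
    query = urllib.parse.urlencode({"curr_abbr": currency})
    url = f"{API_BASE}/marketplace/stats/{release_id}?{query}"
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


def fetch_stats(release_id: int, currency: str = "USD") -> dict:
    """Send the request and decode the JSON response (requires network access)."""
    with urllib.request.urlopen(build_stats_request(release_id, currency), timeout=10) as resp:
        return json.load(resp)


# Usage (makes a live request to the Discogs API):
# print(fetch_stats(5297158))
```

Splitting request construction from sending keeps the URL and headers easy to inspect before any network call is made.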

To scrape data from the Discogs marketplace, you’ll need a script that navigates the website, extracts the relevant information, and stores it in a usable format. So, let’s go ahead and build a Discogs marketplace scraper!

Prepare the environment & import libraries

First things first, ensure you’ve got a coding environment where you can write and execute scripts. You could use Jupyter Notebook, an IDE like Visual Studio Code, or a simple text editor and terminal.

Next, install Python if you haven’t already, along with the main library for this script: Selenium, a powerful tool for controlling web browsers programmatically and automating browser tasks. It’s widely used for web scraping because it can interact with dynamic content on web pages. You can install Selenium with pip, Python’s package installer, by running the following command in your terminal.

pip install selenium

You’ll also need a WebDriver for the browser you want to automate, such as ChromeDriver for Chrome. Since Selenium 4.6, the bundled Selenium Manager can download a matching driver automatically; with older Selenium versions, download ChromeDriver yourself and add its location to your PATH environment variable.

You’re now ready to import the libraries used in this script. From Selenium, you’ll need webdriver to control the browser, the By class to locate elements on a page, and WebDriverWait together with expected_conditions (imported as EC) to wait until dynamic content has loaded. The math module handles the pagination arithmetic, and the csv module stores the scraped data in a CSV file.

from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
import math
import csv

Why proxies?

As with most eCommerce platforms, scraping Discogs without proxies is likely to get your IP address banned. Websites often employ anti-bot protection, so automated requests may be met with restrictions. It’s better to be safe than sorry and set up proxies in the code.

To begin, head over to the Smartproxy dashboard. From there, select a proxy type of your choice: residential, mobile, datacenter, or static residential (ISP) proxies.

For Discogs, we recommend our residential proxies because of their superior authenticity, high success rate (99.68%), rapid response time (<0.5s), and vast geo-targeting possibilities (195+ locations). Here’s how easy it is to buy a plan and get your proxy credentials:

- Find residential proxies by navigating to Residential under the Residential Proxies column on the left panel, and purchase a plan that best suits your needs.

- Open the Proxy setup tab.

- Configure the proxy parameters according to your needs. Set the location and session type.

- Copy your proxy address, port, username, and password for later use, or you can click the download icon in the right corner under the table to download the proxy endpoints (10 by default).

For this use case, we’ll use the USA location with a sticky session of 1 minute. The proxy address is us.smartproxy.com, the port is 10001, the password is generated automatically, and the username gets "user-" added at the beginning and "-sessionduration-1" at the end.
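If you prefer to assemble these credentials in code, here’s a minimal sketch. The function names and the your_username placeholder are hypothetical, and treating the trailing number as the session length in minutes is an assumption drawn from the 1-minute example. The http://user:password@host:port form is the common convention for authenticated HTTP proxies and can be handy for quick checks outside the browser; Chrome itself still needs the extension approach described next.

```python
import urllib.parse


def build_proxy_username(base_username: str, session_minutes: int = 1) -> str:
    # Prefix and suffix as described above; the trailing number is assumed
    # to be the sticky-session length in minutes
    return f"user-{base_username}-sessionduration-{session_minutes}"


def build_proxy_url(base_username: str, password: str,
                    host: str = "us.smartproxy.com", port: int = 10001) -> str:
    """Combine the credentials into an http://user:password@host:port URL."""
    username = build_proxy_username(base_username)
    # Percent-encode credentials in case they contain characters such as '@' or ':'
    user = urllib.parse.quote(username, safe="")
    secret = urllib.parse.quote(password, safe="")
    return f"http://{user}:{secret}@{host}:{port}"


print(build_proxy_username("your_username"))  # prints: user-your_username-sessionduration-1
```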

Integrate proxies

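Chrome’s --proxy-server command-line flag doesn’t accept a username and password, so to use authenticated proxies you’ll load a small Chrome extension made of two files zipped together. Copy the content below into a text editor and save it as "background.js". It configures the proxy settings and supplies your credentials when the proxy asks for authentication. Don’t forget to replace the "<PROXY>", "<PORT>", "<USER>", and "<PASSWORD>" placeholders with your information (the "foobar.com" entry in bypassList is just a sample domain that would skip the proxy).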

var config = {
    mode: "fixed_servers",
    rules: {
        singleProxy: {
            scheme: "http",
            host: "<PROXY>",
            port: parseInt(<PORT>)
        },
        bypassList: ["foobar.com"]
    }
};

chrome.proxy.settings.set({value: config, scope: "regular"}, function() {});

function callbackFn(details) {
    return {
        authCredentials: {
            username: "<USER>",
            password: "<PASSWORD>"
        }
    };
}

chrome.webRequest.onAuthRequired.addListener(
    callbackFn,
    {urls: ["<all_urls>"]},
    ['blocking']
);

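Then, copy the content below and save it as "manifest.json". This file describes the Chrome extension and its permissions; you won’t have to replace any placeholders here. Note that it uses Manifest V2, which Chrome has been phasing out, so recent Chrome versions may refuse to load it.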

{
    "version": "1.0.0",
    "manifest_version": 2,
    "name": "Chrome Proxy",
    "permissions": [
        "proxy",
        "tabs",
        "unlimitedStorage",
        "storage",
        "<all_urls>",
        "webRequest",
        "webRequestBlocking"
    ],
    "background": {
        "scripts": ["background.js"]
    },
    "minimum_chrome_version": "22.0.0"
}

After that, zip both of these files into "proxy.zip", keeping them at the root of the archive rather than inside a folder. You’ll then be ready to integrate proxies by adding the extension to Chrome’s options and specifying the path to the zip file. On macOS, to get the path to the file, right-click the file, hold the Option key, and select "Copy proxy.zip as Pathname". On Windows, right-click the file while holding the Shift key and select "Copy as path".

# Set up Chrome options with proxy
options = webdriver.ChromeOptions()
options.add_extension("/path/to/your/destination/proxy.zip")
driver = webdriver.Chrome(options=options)
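If you’d rather not create and zip the two files by hand, Python’s zipfile module can generate proxy.zip for you. This is a minimal sketch, assuming the same manifest and background script shown above; build_proxy_extension is a hypothetical helper name, and the credentials in the usage comment are placeholders. Encoding each value with json.dumps produces a quoted, escaped string literal, so a password containing quotes won’t break the JavaScript.

```python
import json
import zipfile
from pathlib import Path

# Same manifest as above (Manifest V2)
MANIFEST = {
    "version": "1.0.0",
    "manifest_version": 2,
    "name": "Chrome Proxy",
    "permissions": ["proxy", "tabs", "unlimitedStorage", "storage",
                    "<all_urls>", "webRequest", "webRequestBlocking"],
    "background": {"scripts": ["background.js"]},
    "minimum_chrome_version": "22.0.0",
}

# Same logic as background.js above; the %(...) slots are filled from Python
BACKGROUND_TEMPLATE = """var config = {
  mode: "fixed_servers",
  rules: {
    singleProxy: {scheme: "http", host: %(host)s, port: %(port)d},
    bypassList: ["foobar.com"]
  }
};
chrome.proxy.settings.set({value: config, scope: "regular"}, function() {});
function callbackFn(details) {
  return {authCredentials: {username: %(user)s, password: %(password)s}};
}
chrome.webRequest.onAuthRequired.addListener(callbackFn, {urls: ["<all_urls>"]}, ['blocking']);
"""


def build_proxy_extension(zip_path, host, port, user, password):
    """Write manifest.json and background.js into a zip that Chrome can load."""
    background_js = BACKGROUND_TEMPLATE % {
        # json.dumps returns quoted, escaped strings that are valid JavaScript literals
        "host": json.dumps(host),
        "port": int(port),
        "user": json.dumps(user),
        "password": json.dumps(password),
    }
    zip_path = Path(zip_path)
    with zipfile.ZipFile(zip_path, "w") as zf:
        # Both files go at the root of the archive, where Chrome looks for manifest.json
        zf.writestr("manifest.json", json.dumps(MANIFEST, indent=2))
        zf.writestr("background.js", background_js)
    return zip_path


# Usage (replace the placeholder credentials with your own):
# build_proxy_extension("proxy.zip", "us.smartproxy.com", 10001,
#                       "user-your_username-sessionduration-1", "your_password")
```

The returned path can then be passed to options.add_extension().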

Testing the proxy

This step is optional, but to make sure the proxy is working properly, you can visit a page such as https://ip.smartproxy.com/json, which returns your current IP address and other identifying data, and print its contents in the terminal. Use this standalone script for testing purposes.

from selenium import webdriver
from selenium.webdriver.common.by import By

# Set up Chrome options with proxy
options = webdriver.ChromeOptions()
options.add_extension("/path/to/your/destination/proxy.zip")
driver = webdriver.Chrome(options=options)

# Test URL to check the proxy
test_url = "https://ip.smartproxy.com/json"
driver.get(test_url)

# Print the proxy check webpage
page_text = driver.find_element(By.TAG_NAME, 'body').text
print(page_text)
print("\n")

# Close the browser once the check is done
driver.quit()

Define the Discogs marketplace target

There are two types of Discogs marketplace pages. Choose the version-generic type to find listings for various versions of a release, or the version-specific type to find listings for one particular version only. Here’s how to find them:

- Version-generic type. To find a marketplace page that offers various versions of a release, go to a specific release page and click on the number of copies available under the "For sale on Discogs" section on the right-hand side. Your URL will then be structured like "discogs.com/sell/list…" Here’s the URL of the version-generic marketplace page of the album Pygmalion by Slowdive: https://www.discogs.com/sell/list?master_id=9482&ev=mb.

- Version-specific type. To find a marketplace page that offers only a specific version of a release, go to a release page, scroll through the version list below, click on the one you’re interested in, and then click on the number of copies available under the "For sale on Discogs" section on the right side of the page. Your URL will be structured like "discogs.com/sell/release…" Here’s the marketplace URL of the specific Pygmalion vinyl record released in 2012 by the label Music On Vinyl: https://www.discogs.com/sell/release/5297158?ev=rb.
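The two URL patterns above can also be told apart in code. This minimal sketch uses urllib.parse and assumes only the path shapes shown in the example URLs (/sell/list with a master_id query parameter, and /sell/release/ followed by a release ID); classify_marketplace_url is a hypothetical name. It could be used, for instance, to skip the statistics step on version-generic pages, which don’t display statistics.

```python
from typing import Optional, Tuple
from urllib.parse import parse_qs, urlparse


def classify_marketplace_url(url: str) -> Tuple[str, Optional[str]]:
    """Return ("version-generic", master_id) or ("version-specific", release_id)."""
    parsed = urlparse(url)
    path = parsed.path.rstrip("/")
    if path == "/sell/list":
        # Version-generic pages carry the master release ID in the query string
        master_ids = parse_qs(parsed.query).get("master_id")
        return "version-generic", master_ids[0] if master_ids else None
    if path.startswith("/sell/release/"):
        # Version-specific pages carry the release ID as the last path segment
        return "version-specific", path.rsplit("/", 1)[-1]
    raise ValueError(f"Not a recognized Discogs marketplace URL: {url}")


print(classify_marketplace_url("https://www.discogs.com/sell/list?master_id=9482&ev=mb"))
# prints: ('version-generic', '9482')
print(classify_marketplace_url("https://www.discogs.com/sell/release/5297158?ev=rb"))
# prints: ('version-specific', '5297158')
```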

Our script works with either type of Discogs marketplace page. In the following code, we’ll use the version-specific URL as an example.

# Discogs marketplace URL to scrape
url = "https://www.discogs.com/sell/release/5297158?ev=rb"

Define and add custom headers and cookies

Browsers send headers with their web requests that tell the web server about your computer, browser version, content preferences, and several other parameters. By including realistic headers, you mimic the behavior of real web browsers, so your requests look more natural to the server and are less likely to be detected and blocked. Let’s use these three:

- User-Agent. Identifies the browser, version, and operating system. This makes your request look like it’s coming from a specific browser.

- Accept-Language. Specifies the preferred language for the response.

- Referer. Indicates the last webpage the user was on, which included a link to the target page, helping to simulate natural browsing behavior.

The User-Agent below identifies Chrome running on macOS; the Mozilla, AppleWebKit, and Safari tokens are compatibility markers that Chrome includes in its User-Agent string. Consider updating the version number to match the Chrome you actually run, since a header that disagrees with the real browser may look suspicious. The Accept-Language header requests English (US) content, and the Referer says you arrived from the Discogs homepage, so the target website treats the request as if it came from a real browser. The headers are applied to the browser session with a Chrome DevTools Protocol command once the first page is open and the cookies are set.

# Define custom headers
headers = {
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36",
    "Accept-Language": "en-US",
    "Referer": "https://www.discogs.com/"
}

# Open the initial URL to set the correct domain
driver.get(url)

Next, add two custom cookies: a currency preference (in this case, USD) and __hssrc, a session cookie that signals a continuing rather than a fresh browsing session, which may reduce the likelihood of detection and blocks. Please note that the scraped results might still show prices in currencies other than the one set by the cookie, as the displayed currency depends on the seller’s location and your proxy location.

# Add custom cookies
cookies = [
    {"name": "currency", "value": "USD", "domain": ".discogs.com"},
    {"name": "__hssrc", "value": "1", "domain": ".discogs.com"}
]
for cookie in cookies:
    driver.add_cookie(cookie)

# Add custom headers
driver.execute_cdp_cmd('Network.setExtraHTTPHeaders', {'headers': headers})

Accept the target website’s cookies

Since Discogs displays a cookie consent banner, you should dismiss it before scraping the website’s data. This part of the code waits for the consent button to become clickable and clicks it, so your script can proceed without interruptions. If the banner never appears, the function simply moves on.

You can adjust how long the browser waits for the button by changing the value 10 (seconds). Keep in mind that page loading time varies with your system’s speed and with the proxy connection, whose speed depends on the distance between you, the proxy location, and the target’s server.

# Function to accept cookies
def close_cookies_banner(driver):
    try:
        cookies_button = WebDriverWait(driver, 10).until(
            EC.element_to_be_clickable((By.ID, "onetrust-accept-btn-handler"))
        )
        cookies_button.click()
    except Exception:
        pass  # Continue execution even if the cookies banner is not found

Get pagination

There may be multiple pages of listings you wish to scrape, so your script needs to know the total number of pages. Here’s where the math module comes into play: instead of relying on the website to show the total number of pages (version-generic marketplace pages don’t display it), the script reads the total number of items and divides it by the default of 25 items per page, rounding up with math.ceil.

# Function to get total number of pages
def get_total_pages(driver):
    try:
        pagination_text = WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, '#pjax_container > nav > form > strong'))
        ).text
        total_items = int(pagination_text.split()[-1].replace(',', ''))
        items_per_page = 25
        total_pages = math.ceil(total_items / items_per_page)
        return total_pages
    except Exception as e:
        print(f"Error extracting total pages: {e}")
        return 1  # Default to 1 page if extraction fails
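The page-count arithmetic can be checked without opening a browser. This minimal sketch isolates the parsing and rounding from get_total_pages(); total_pages_from_text is a hypothetical name, and the sample strings are made up, since the function only assumes (like get_total_pages) that the item count is the last whitespace-separated token of the pagination text.

```python
import math


def total_pages_from_text(pagination_text: str, items_per_page: int = 25) -> int:
    """Read the last token as the total item count, then round up to whole pages."""
    total_items = int(pagination_text.split()[-1].replace(",", ""))
    # max(1, ...) keeps at least one page, in the spirit of the fallback above
    return max(1, math.ceil(total_items / items_per_page))


# Hypothetical pagination texts; only the last token matters
print(total_pages_from_text("1 - 25 of 106"))    # prints: 5
print(total_pages_from_text("1 - 25 of 1,234"))  # prints: 50
```

Rounding up matters: 106 items fill four full pages of 25 and leave 6 items on a fifth page.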

Remove blank lines

Some elements, such as an item’s condition, contain blank lines in the middle of their text. This helper function cleans up a text block by removing any blank lines, keeping only meaningful ones.

# Function to remove blank lines from text
def remove_blank_lines(text):
    return "\n".join([line for line in text.split("\n") if line.strip() != ""])
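To see what the helper does, here’s a quick check on a made-up condition string (the sample text is hypothetical). The sketch also shows a variant, remove_blank_lines_any_eol (a hypothetical name), built on str.splitlines(), which additionally treats "\r\n" and "\r" as line breaks, so Windows-style line endings don’t leave stray "\r" characters behind.

```python
def remove_blank_lines(text):
    # Same logic as the helper above: keep only lines with visible characters
    return "\n".join([line for line in text.split("\n") if line.strip() != ""])


def remove_blank_lines_any_eol(text):
    # Variant: splitlines() also splits on "\r\n" and "\r"
    return "\n".join(line for line in text.splitlines() if line.strip())


# Hypothetical condition text with blank lines in the middle
raw = "Media Condition:\nMint (M)\n\n   \nSleeve Condition:\nNear Mint (NM or M-)"
print(remove_blank_lines(raw))
```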

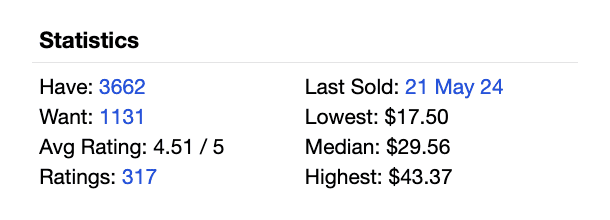

Extract record statistics

User statistics on Discogs are immensely valuable. They indicate how many users own this version of the record, how many want it, its average rating score, the number of ratings it has received, when the last record of this version was sold, the lowest price it was ever sold for, the median price, and the highest price it was ever sold for. Please note that the version-generic Discogs marketplace pages don’t display these statistics. Here’s the function that allows you to scrape the statistics element.

# Function to scrape statistics
def scrape_statistics(driver):
    try:
        statistics_element = WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, '#statistics > div.section_content.toggle_section_content'))
        )
        statistics_text = statistics_element.text.strip()
        print("Statistics:\n")
        print(statistics_text)
        print("\n")
        return statistics_text
    except Exception:
        return None
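scrape_statistics() returns the statistics as one block of text. If you’d like each figure as a separate value (for example, to give each statistic its own CSV column), a small parser can split the block. This is a sketch under an assumption: that each label ends with a colon and its value follows on the same line or on the next one. The real layout on Discogs may differ, parse_statistics is a hypothetical name, and the sample text is made up.

```python
def parse_statistics(statistics_text: str) -> dict:
    """Turn "Label: value" lines, or a "Label:" line followed by its value, into a dict."""
    stats = {}
    pending_label = None
    for raw_line in statistics_text.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        if pending_label is not None:
            # This line holds the value for the label seen on the previous line
            stats[pending_label] = line
            pending_label = None
        elif ":" in line:
            # Split on the first colon only, so values like dates stay intact
            label, _, value = line.partition(":")
            if value.strip():
                stats[label.strip()] = value.strip()
            else:
                pending_label = label.strip()
    return stats


# Hypothetical statistics text
sample = "Have: 1,234\nWant: 567\nAvg Rating: 4.5 / 5\nLast Sold: Apr 3, 2024"
print(parse_statistics(sample))
```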

Define scraping logic and print the data in the terminal

Here comes one of the most important parts of the script: the scraping logic. The script waits for the page to load, extracts listing data (such as the item name, price, shipping cost, condition, and seller information), and prints it in the terminal. The data is targeted with CSS selectors, which you can find by inspecting the webpage’s HTML.

The scrape_page function automates the extraction of item data from the page the driver currently has open; navigating between pages is handled by the main loop later in the script. The function takes three parameters: the web driver instance, the current page number (used in log messages), and a list that collects the scraped data.

# Function to scrape a page
def scrape_page(driver, page_number, scraped_data):
    print(f"Scraping page {page_number}")
    try:
        # Wait for the page to load the items
        WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, '#pjax_container > table > tbody > tr > td.item_description > strong > a'))
        )
        # Scrape the data
        item_elements = driver.find_elements(By.CSS_SELECTOR, '#pjax_container > table > tbody > tr')
        for item in item_elements:

Once the page is loaded and the items are detected, the function retrieves all item elements from the page using the specified CSS selector. Each item element is then processed individually to extract various details. A nested try-except block ensures that if there’s an error in extracting data for a specific item, the program will catch the exception and print an error message without terminating the entire scraping process. This robust error handling allows the scraper to continue working even if some items on the page have missing or malformed data.

After extracting all relevant data for an item, the function prints the collected information in a readable format. This is useful for debugging and monitoring the scraping process in real time. By printing details, you can verify that the scraper is functioning correctly and capturing the expected data.

            try:
                item_data = {}
                item_data['Item'] = item.find_element(By.CSS_SELECTOR, 'td.item_description > strong > a').text
                item_data['Price'] = item.find_element(By.CSS_SELECTOR, 'td.item_price.hide_mobile > span.price').text.strip()
                shipping_element = item.find_elements(By.CSS_SELECTOR, 'td.item_price.hide_mobile > span.hide_mobile.item_shipping')
                item_data['Shipping'] = shipping_element[0].text.strip() if shipping_element else 'N/A'
                total_price_element = item.find_elements(By.CSS_SELECTOR, 'td.item_price.hide_mobile > span.converted_price')
                item_data['Total Price'] = total_price_element[0].text.strip() if total_price_element else 'N/A'
                condition_element = item.find_elements(By.CSS_SELECTOR, 'td.item_description > p.item_condition')
                item_data['Condition'] = remove_blank_lines(condition_element[0].text) if condition_element else 'N/A'
                seller_element = item.find_elements(By.CSS_SELECTOR, 'td.seller_info > ul > li > div > strong > a')
                item_data['Seller'] = seller_element[0].text.strip() if seller_element else 'N/A'
                rating_element = item.find_elements(By.CSS_SELECTOR, 'td.seller_info > ul > li > strong')
                item_data['Seller Rating'] = rating_element[0].text.strip() if rating_element else 'N/A'
                total_rating_element = item.find_elements(By.CSS_SELECTOR, 'td.seller_info > ul > li > a')
                item_data['Seller Total Ratings'] = total_rating_element[0].text.strip() if total_rating_element else 'N/A'
                # Check for new seller
                new_seller_element = item.find_elements(By.CSS_SELECTOR, 'td.seller_info > ul > li > span')
                if new_seller_element and 'New Seller' in new_seller_element[0].text:
                    item_data['Seller Rating'] = 'New Seller'
                    item_data['Seller Total Ratings'] = 'N/A'
                print(
                    f"\n"
                    f"Item: {item_data['Item']}\n"
                    f"Price: {item_data['Price']}\n"
                    f"Shipping: {item_data['Shipping']}\n"
                    f"Total Price: {item_data['Total Price']}\n"
                    f"Condition: {item_data['Condition']}\n"
                    f"Seller: {item_data['Seller']}\n"
                    f"Seller's Rating: {item_data['Seller Rating']}\n"
                    f"Seller's Total Ratings: {item_data['Seller Total Ratings']}\n"
                )
                scraped_data.append(item_data)
            except Exception as e:
                print(f"Error extracting data for an item on page {page_number}: {e}")
    except Exception as e:
        print(f"Error while scraping page {page_number}: {e}")
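The per-item extraction above repeats one pattern: call find_elements(), then take the first match’s text or fall back to 'N/A'. One way to trim that repetition is a small helper. In this sketch, first_text and FakeElement are hypothetical names; FakeElement only stands in for a Selenium WebElement (which exposes a .text attribute) so the example runs without a browser.

```python
from dataclasses import dataclass


def first_text(elements, default="N/A", clean=str.strip):
    """Return clean(text) of the first element, or `default` if the list is empty.

    Selenium's find_elements() returns an empty list when nothing matches,
    so an empty list means the field is missing from that listing row."""
    return clean(elements[0].text) if elements else default


@dataclass
class FakeElement:
    """Stand-in for a Selenium WebElement so the sketch runs offline."""
    text: str


print(first_text([FakeElement("  +$4.50 shipping  ")]))  # prints: +$4.50 shipping
print(first_text([]))                                     # prints: N/A

# Inside scrape_page(), two-line pairs could then become single calls, e.g.:
# item_data['Shipping'] = first_text(item.find_elements(
#     By.CSS_SELECTOR, 'td.item_price.hide_mobile > span.hide_mobile.item_shipping'))
# item_data['Condition'] = first_text(condition_element, clean=remove_blank_lines)
```

Passing the cleanup function as a parameter lets the same helper handle both plain fields (stripped) and the condition field (blank lines removed).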

Scrape record statistics and ask for user input

Up until now, you’ve been defining and setting up things in your code. Now, it’s time for some actual scraping. This section of the code is responsible for scraping the statistics data, determining total pages, asking for user input on how many pages you wish to scrape, then iterating through pages to gather data, and closing the browser once the job is done.

# Initialize containers for the scraped data and statistics
scraped_data = []
statistics_text = None

try:
    # Reload the URL so the custom cookies and headers apply
    driver.get(url)
    # Try to close cookies banner, if present
    close_cookies_banner(driver)
    # Scrape statistics on the first page
    statistics_text = scrape_statistics(driver)
    # Get the total number of pages available for scraping
    total_pages = get_total_pages(driver)
    print(f"Total pages: {total_pages}")
    print("\n")
    # Ask the user how many pages they want to scrape
    num_pages_to_scrape = int(input(f"How many pages do you want to scrape (1-{total_pages})? "))
    if num_pages_to_scrape > total_pages:
        print(f"You've requested more pages than available. Scraping {total_pages} pages instead.")
        num_pages_to_scrape = total_pages
    # Loop through the specified number of pages and scrape data from each
    for page_number in range(1, num_pages_to_scrape + 1):
        current_url = f"{url}&page={page_number}" if page_number > 1 else url
        driver.get(current_url)
        scrape_page(driver, page_number, scraped_data)
finally:
    # Close the browser
    driver.quit()
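The loop builds page URLs by appending "&page=N", which works for both example URLs because they already contain a query string (?ev=...), and int(input(...)) raises ValueError on non-numeric input. Below is a sketch of more defensive variants using urllib.parse; page_url and clamp_pages are hypothetical helpers, not part of the script above, and falling back to one page on invalid input is this sketch’s own choice.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def page_url(base_url: str, page_number: int) -> str:
    """Return base_url with a page=N query parameter; page 1 keeps the URL unchanged."""
    if page_number <= 1:
        return base_url
    parts = urlparse(base_url)
    # Keep existing parameters (e.g. ev=rb) but replace any previous page value
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True) if k != "page"]
    query.append(("page", str(page_number)))
    return urlunparse(parts._replace(query=urlencode(query)))


def clamp_pages(raw: str, total_pages: int) -> int:
    """Turn the user's answer into a page count between 1 and total_pages."""
    try:
        requested = int(raw)
    except ValueError:
        return 1  # this sketch's fallback for non-numeric input
    return max(1, min(requested, total_pages))


print(page_url("https://www.discogs.com/sell/release/5297158?ev=rb", 2))
# prints: https://www.discogs.com/sell/release/5297158?ev=rb&page=2
```

Unlike plain string concatenation, page_url also produces a valid URL when the base has no query string, and it doesn’t duplicate the page parameter.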

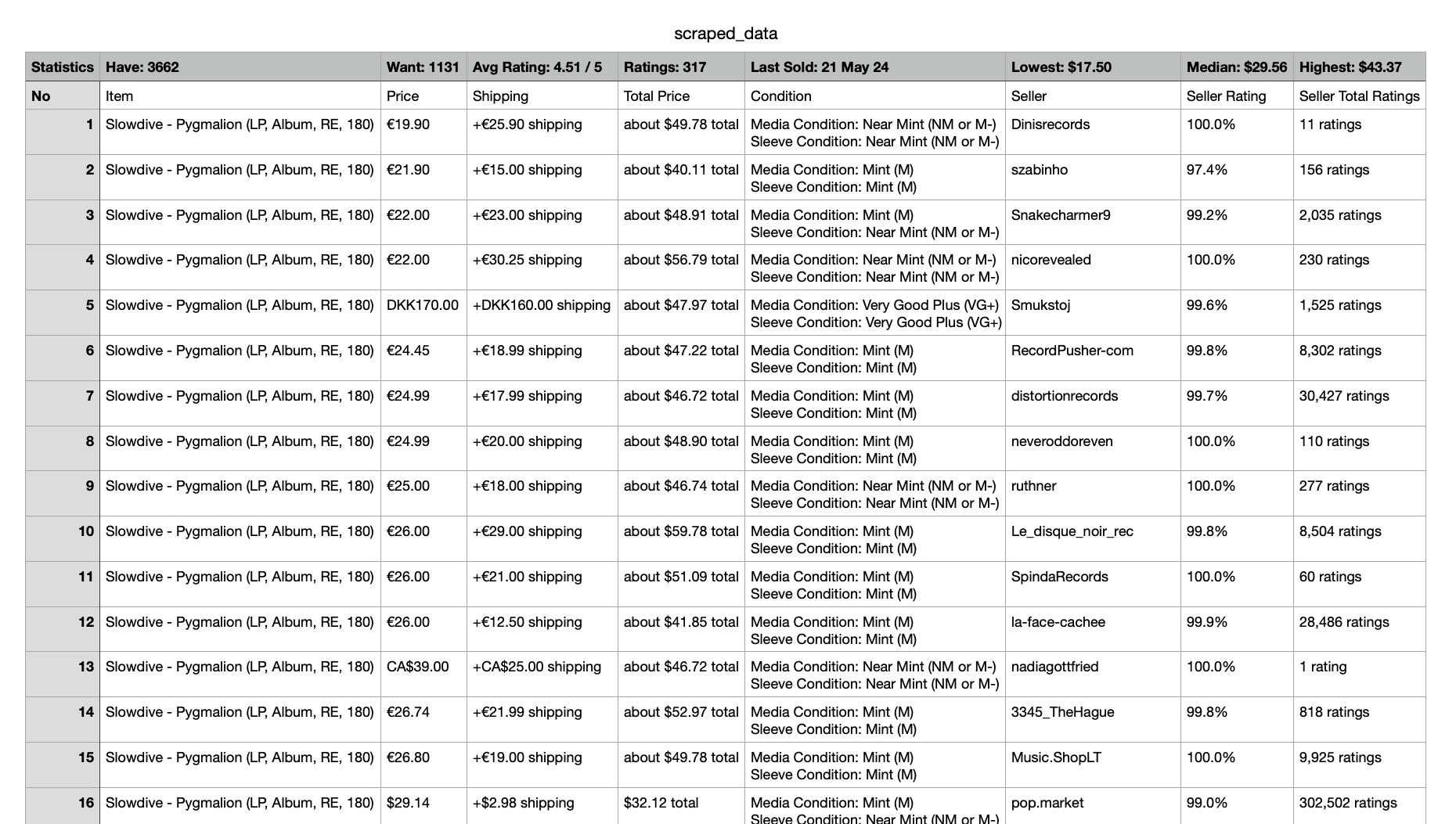

Save the data to a CSV file

Finally, let’s save the scraped data to a CSV file. This last part of the code writes the statistics data into the first row, adds headers for the item data columns, writes each item’s details, then saves this data to a CSV file in your specified directory, and confirms that this action was successful in the terminal.

# Save the data to a CSV file
def save_to_csv(statistics_text, scraped_data, csv_file_path):
    with open(csv_file_path, mode='w', newline='', encoding='utf-8') as file:
        writer = csv.writer(file)
        # Write the statistics data in a single row if available
        if statistics_text:
            writer.writerow(['Statistics'] + statistics_text.split('\n'))
        # Write headers for the item data
        fieldnames = ['No', 'Item', 'Price', 'Shipping', 'Total Price', 'Condition', 'Seller', 'Seller Rating', 'Seller Total Ratings']
        writer.writerow(fieldnames)
        # Write item data
        for i, row in enumerate(scraped_data, start=1):
            row_with_num = [i] + [row[field] for field in fieldnames[1:]]
            writer.writerow(row_with_num)

csv_file_path = "/path/to/your/destination/scraped_data.csv"
save_to_csv(statistics_text, scraped_data, csv_file_path)
print(f"Data saved to {csv_file_path}")
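As an alternative to building each row list by hand, csv.DictWriter can map every item dictionary to columns by key. This is a sketch, not the approach used above: rows_to_csv_text is a hypothetical name, and it writes to an in-memory buffer so the output is easy to inspect. The sample row is made up.

```python
import csv
import io

ITEM_FIELDS = ['Item', 'Price', 'Shipping', 'Total Price', 'Condition',
               'Seller', 'Seller Rating', 'Seller Total Ratings']


def rows_to_csv_text(scraped_data):
    """Serialize item dicts to CSV text, numbering rows in a leading 'No' column."""
    buffer = io.StringIO()
    # restval fills any missing key with 'N/A' instead of raising a KeyError
    writer = csv.DictWriter(buffer, fieldnames=['No'] + ITEM_FIELDS, restval='N/A')
    writer.writeheader()
    for i, row in enumerate(scraped_data, start=1):
        writer.writerow({'No': i, **row})
    return buffer.getvalue()


# Hypothetical row with only two fields filled in
print(rows_to_csv_text([{'Item': 'Slowdive - Pygmalion', 'Price': '$30.00'}]))
```

To save the result, write the returned text to a file opened with newline='' (as save_to_csv does), so the csv module’s line endings are preserved.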

The entire Discogs marketplace scraper code & the result

Congrats on building your Discogs marketplace scraper! Don’t forget to replace the two placeholders in this code (the path to your proxy.zip file and the path where the scraped-data CSV file should be saved), and add or remove functionality according to your needs. Save the code with the .py extension and run it from your terminal with "python" followed by the path to your script or, if your terminal is already in the script’s directory, simply "python script_name.py".

from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
import math
import csv

# Set up Chrome options with proxy
options = webdriver.ChromeOptions()
options.add_extension("/path/to/your/destination/proxy.zip")
driver = webdriver.Chrome(options=options)

# Discogs marketplace URL to scrape
url = "https://www.discogs.com/sell/release/5297158?ev=rb"

# Define custom headers
headers = {
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36",
    "Accept-Language": "en-US",
    "Referer": "https://www.discogs.com/"
}

# Open the initial URL to set the correct domain
driver.get(url)

# Add custom cookies
cookies = [
    {"name": "currency", "value": "USD", "domain": ".discogs.com"},
    {"name": "__hssrc", "value": "1", "domain": ".discogs.com"}
]
for cookie in cookies:
    driver.add_cookie(cookie)

# Add custom headers
driver.execute_cdp_cmd('Network.setExtraHTTPHeaders', {'headers': headers})

# Function to accept cookies
def close_cookies_banner(driver):
    try:
        cookies_button = WebDriverWait(driver, 10).until(
            EC.element_to_be_clickable((By.ID, "onetrust-accept-btn-handler"))
        )
        cookies_button.click()
    except Exception:
        pass  # Continue execution even if the cookies banner is not found

# Function to get total number of pages
def get_total_pages(driver):
    try:
        pagination_text = WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, '#pjax_container > nav > form > strong'))
        ).text
        total_items = int(pagination_text.split()[-1].replace(',', ''))
        items_per_page = 25
        total_pages = math.ceil(total_items / items_per_page)
        return total_pages
    except Exception as e:
        print(f"Error extracting total pages: {e}")
        return 1  # Default to 1 page if extraction fails

# Function to remove blank lines from text
def remove_blank_lines(text):
    return "\n".join([line for line in text.split("\n") if line.strip() != ""])

# Function to scrape statistics
def scrape_statistics(driver):
    try:
        statistics_element = WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, '#statistics > div.section_content.toggle_section_content'))
        )
        statistics_text = statistics_element.text.strip()
        print("Statistics:\n")
        print(statistics_text)
        print("\n")
        return statistics_text
    except Exception:
        return None

# Function to scrape a page
def scrape_page(driver, page_number, scraped_data):
    print(f"Scraping page {page_number}")
    try:
        # Wait for the page to load the items
        WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, '#pjax_container > table > tbody > tr > td.item_description > strong > a'))
        )
        # Scrape the data
        item_elements = driver.find_elements(By.CSS_SELECTOR, '#pjax_container > table > tbody > tr')
        for item in item_elements:
            try:
                item_data = {}
                item_data['Item'] = item.find_element(By.CSS_SELECTOR, 'td.item_description > strong > a').text
                item_data['Price'] = item.find_element(By.CSS_SELECTOR, 'td.item_price.hide_mobile > span.price').text.strip()
                shipping_element = item.find_elements(By.CSS_SELECTOR, 'td.item_price.hide_mobile > span.hide_mobile.item_shipping')
                item_data['Shipping'] = shipping_element[0].text.strip() if shipping_element else 'N/A'
                total_price_element = item.find_elements(By.CSS_SELECTOR, 'td.item_price.hide_mobile > span.converted_price')
                item_data['Total Price'] = total_price_element[0].text.strip() if total_price_element else 'N/A'
                condition_element = item.find_elements(By.CSS_SELECTOR, 'td.item_description > p.item_condition')
                item_data['Condition'] = remove_blank_lines(condition_element[0].text) if condition_element else 'N/A'
                seller_element = item.find_elements(By.CSS_SELECTOR, 'td.seller_info > ul > li > div > strong > a')
                item_data['Seller'] = seller_element[0].text.strip() if seller_element else 'N/A'
                rating_element = item.find_elements(By.CSS_SELECTOR, 'td.seller_info > ul > li > strong')
                item_data['Seller Rating'] = rating_element[0].text.strip() if rating_element else 'N/A'
                total_rating_element = item.find_elements(By.CSS_SELECTOR, 'td.seller_info > ul > li > a')
                item_data['Seller Total Ratings'] = total_rating_element[0].text.strip() if total_rating_element else 'N/A'
                # Check for new seller
                new_seller_element = item.find_elements(By.CSS_SELECTOR, 'td.seller_info > ul > li > span')
                if new_seller_element and 'New Seller' in new_seller_element[0].text:
                    item_data['Seller Rating'] = 'New Seller'
                    item_data['Seller Total Ratings'] = 'N/A'
                print(
                    f"\n"
                    f"Item: {item_data['Item']}\n"
                    f"Price: {item_data['Price']}\n"
                    f"Shipping: {item_data['Shipping']}\n"
                    f"Total Price: {item_data['Total Price']}\n"
                    f"Condition: {item_data['Condition']}\n"
                    f"Seller: {item_data['Seller']}\n"
                    f"Seller's Rating: {item_data['Seller Rating']}\n"
                    f"Seller's Total Ratings: {item_data['Seller Total Ratings']}\n"
                )
                scraped_data.append(item_data)
            except Exception as e:
                print(f"Error extracting data for an item on page {page_number}: {e}")
    except Exception as e:
        print(f"Error while scraping page {page_number}: {e}")

# Initialize containers for the scraped data and statistics
scraped_data = []
statistics_text = None

try:
    # Reload the URL so the custom cookies and headers apply
    driver.get(url)
    # Try to close cookies banner, if present
    close_cookies_banner(driver)
    # Scrape statistics on the first page
    statistics_text = scrape_statistics(driver)
    # Get the total number of pages available for scraping
    total_pages = get_total_pages(driver)
    print(f"Total pages: {total_pages}")
    print("\n")
    # Ask the user how many pages they want to scrape
    num_pages_to_scrape = int(input(f"How many pages do you want to scrape (1-{total_pages})? "))
    if num_pages_to_scrape > total_pages:
        print(f"You've requested more pages than available. Scraping {total_pages} pages instead.")
        num_pages_to_scrape = total_pages
    # Loop through the specified number of pages and scrape data from each
    for page_number in range(1, num_pages_to_scrape + 1):
        current_url = f"{url}&page={page_number}" if page_number > 1 else url
        driver.get(current_url)
        scrape_page(driver, page_number, scraped_data)
finally:
    # Close the browser
    driver.quit()

# Save the data to a CSV file
def save_to_csv(statistics_text, scraped_data, csv_file_path):
    with open(csv_file_path, mode='w', newline='', encoding='utf-8') as file:
        writer = csv.writer(file)
        # Write the statistics data in a single row if available
        if statistics_text:
            writer.writerow(['Statistics'] + statistics_text.split('\n'))
        # Write headers for the item data
        fieldnames = ['No', 'Item', 'Price', 'Shipping', 'Total Price', 'Condition', 'Seller', 'Seller Rating', 'Seller Total Ratings']
        writer.writerow(fieldnames)
        # Write item data
        for i, row in enumerate(scraped_data, start=1):
            row_with_num = [i] + [row[field] for field in fieldnames[1:]]
            writer.writerow(row_with_num)

csv_file_path = "/path/to/your/destination/scraped_data.csv"
save_to_csv(statistics_text, scraped_data, csv_file_path)
print(f"Data saved to {csv_file_path}")

After running this code, the script will initiate a browser instance, display the statistics of the record you’re scraping, indicate how many pages there are, ask for your input on how many pages it should scrape, provide the record data in the terminal, save it to a CSV file, and confirm that the file was saved in your defined directory.

In the saved CSV file, the first row contains the statistics of the specific record, followed by the column titles and scraped data.

Looking at the data in the screenshot, you can clearly see that if you, for example, wish to buy a Pygmalion record in mint condition at the lowest price, item number 2 is the most affordable option. This is just one of many ways to utilize the gathered data to your advantage, so have fun with it!
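Comparisons like this can also be automated. This minimal sketch picks the cheapest listing in a given condition from the scraped rows, assuming each Price string puts the currency symbol before the number (as in $25.00, with commas as thousands separators) and that the Condition text contains Discogs’ grading label, such as Mint (M). Because results may mix currencies, it only compares prices that share one symbol, and it matches the label anywhere in the Condition text, so it doesn’t distinguish media condition from sleeve condition. The helper names are hypothetical.

```python
import re
from typing import Optional, Tuple

# Optional non-numeric prefix (the currency symbol), then a number with ',' separators
PRICE_RE = re.compile(r"([^\d\s.,]+)?\s*(\d[\d,]*(?:\.\d+)?)")


def parse_price(text: str) -> Optional[Tuple[str, float]]:
    """Split a price such as '$25.00' or '€1,234.50' into (symbol, amount)."""
    match = PRICE_RE.search(text)
    if not match:
        return None
    symbol = (match.group(1) or "").strip()
    return symbol, float(match.group(2).replace(",", ""))


def cheapest_in_condition(rows, condition="Mint (M)", currency="$"):
    """Return the lowest-priced row in the given condition and currency, or None."""
    best_row, best_amount = None, None
    for row in rows:
        parsed = parse_price(row.get("Price", ""))
        if parsed is None or parsed[0] != currency:
            continue  # skip unparseable prices and other currencies
        if condition not in row.get("Condition", ""):
            continue
        if best_amount is None or parsed[1] < best_amount:
            best_row, best_amount = row, parsed[1]
    return best_row


# Usage with rows collected by scrape_page():
# print(cheapest_in_condition(scraped_data))
```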

Wrapping up

Following the steps above, you can efficiently gather and analyze Discogs marketplace data to better understand the vinyl record market. We hope that you’ll successfully adjust the code as needed for your specific purposes and gain invaluable insights to make informed decisions on purchases and sales. And don't forget the essential role of proxies that will ensure your projects flow smoothly and uninterrupted.

About the author

Dominykas Niaura

Copywriter

As a fan of digital innovation and data intelligence, Dominykas delights in explaining our products’ benefits, demonstrating their use cases, and demystifying complex tech topics for everyday readers.

All information on Smartproxy Blog is provided on an "as is" basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Smartproxy Blog or any third-party websites that may be linked therein.